By Claudia Neuhauser, PhD, Vice President for Research, Professor of Mathematics, University of Houston; Brian Herman, PhD, Professor, Department of Biomedical Engineering and former Vice President for Research, University of Minnesota and University of Texas Health, San Antonio

Winston, the main character in George Orwell’s 1984, works in the Ministry of Truth. One of the main functions of the Ministry of Truth is to falsify the past to ensure “the stability of the regime.” The book that Winston reads in the novel, attributed to Emmanuel Goldstein, explains the need to continuously rewrite the past as follows:

“The alteration of the past is necessary for two reasons, one of which is subsidiary and, so to speak, precautionary. The subsidiary reason is that the Party member, like the proletarian, tolerates present-day conditions partly because he has no standards of comparison. He must be cut off from the past, just as he must be cut off from foreign countries, because it is necessary for him to believe that he is better off than his ancestors and that the average level of material comfort is constantly rising. But by far the more important reason for the readjustment of the past is the need to safeguard the infallibility of the Party. It is not merely that speeches, statistics, and records of every kind must be constantly brought up to date in order to show that the predictions of the Party were in all cases right. It is also that no change in doctrine or in political alignment can ever be admitted.”

Today’s AI and social media companies might be turning into a modern Ministry of Truth, not because the past needs to be corrected for the sake of stability but because AI actively inserts itself into what we write, which is then available to train Large Language Models (LLMs).

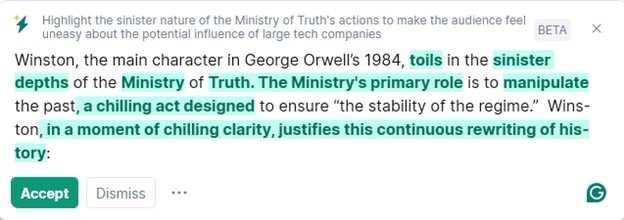

We experienced this active interference firsthand when we wrote this blog: The topic of large tech companies’ potential influence on truth prompted Grammarly to ask us to rewrite the first paragraph. Its justification was that we should “[h]ighlight the sinister nature of the Ministry of Truth’s actions to make the audience feel uneasy about the potential influence of large tech companies.”

Grammarly inserted itself to shape the tone of this blog. We dismissed its suggestion since it wasn’t our intent “to make the audience feel uneasy.” But it shows how AI can influence what people write and, hence, what people read, which, in turn, may be used to train future LLMs. Even worse, social media algorithms boost content based on engagement rather than accuracy to feed their business model. This all contributes to a distorted online reality. Because future LLMs base their responses on what is available online, those responses can shift how people perceive the world. We call this shift in perception “reality drift.”

//

Pollution adds to reality drift

The promise of AI is huge, but the dangers of misuse are vast. Concerns about bias, surveillance, data privacy, insufficient oversight, virtual environments, cybersecurity, and even the potential for sentience and the essence of human nature itself all call for ethical applications of AI that enhance rather than harm our collective civilization. At its core, for AI to benefit society, we must know that the output of AI algorithms is accurate and truthful.

With the enormous amount of information available online, we can now pick whatever piece of information supports whatever point we want to make. It is difficult for the layperson to identify what the consensus knowledge is. Depending on where one looks for information, it is easy to get a distorted view of the truth and, hence, reality. Because information is readily shared online, and outrageous claims are often shared even more than the boring truth, inaccurate information will find its way back onto the internet and further pollute the corpus that is used to train AI platforms.

Even academic research is getting overwhelmed with questionable knowledge. Katharine Sanderson reported in Nature about the problem of “[p]oor-quality studies […] polluting the literature.” She quoted a study that “indicate[d] that some 2% of all scientific papers published in 2022 resemble paper-mill productions.” Generative AI will only make this problem worse. Not only will it be easier to produce fake papers, but fake papers will also be used to train the models, which will be used to churn out more fake papers. The reliability of research decreases, with consequences well beyond the existence of fake papers in peer-reviewed research journals.

The risk to the public is particularly great in medicine, where research results are often disseminated widely and patients are eager to find solutions to their problems, particularly if the medical establishment cannot provide the relief they are seeking. We saw this during the pandemic when patients were eager to follow questionable treatments that spread through social media. Social media’s influence on medical treatment continues to this day, with millions of people following advice, at times harmful, from people with no medical training.

//

Malicious actors accelerate reality drift

Malicious actors can accelerate how the data drifts and, hence, what AI platforms present to users. LLMs don’t have a mechanism to reinforce the truth. Historical accounts can be shaded or suppressed depending on how the corpus grows and how LLMs access the data to form responses. A malicious actor can plant and spread false information that could become the dominant voice over time. There is no active curation that removes inaccurate information. While grossly wrong statements might be discovered and perhaps even removed, slightly wrong statements are harder to find; they may remain and lead to a drift in what we consider the truth.

Autocratic systems, where the government has near complete control over what the population can see, are particularly prone to manipulating information. This is already happening: Yuan wrote in the New York Times about the erasure of large chunks of information posted on social media sites, blogs, and other portals on China’s internet between 1995 and 2005.

However, malicious actors can cause the data to drift even in democratic societies because of the openness of the internet. Malicious actors can disguise their identity and attract large numbers of followers who repost and spread falsehoods even further. Axios reported that “fake news outlets [are] outnumber[ing] real outlets” and that “[a]t least 1,265 websites are being backed by dark money or intentionally masquerading as local news sites for political purposes, according to NewsGuard, a misinformation tracking site.”

//

Algorithms drive reality

Social media algorithms are instrumental in how a statement is disseminated. But instead of ensuring the veracity of the statements that are disseminated, algorithms optimize user engagement. What a user sees depends on what they have engaged with in the past. As a statement is disseminated and amplified, its message may change depending on the group it is shared with. The same message may end up in different groups with little interaction among the groups. This resembles how genetic drift in isolated populations can lead to speciation. The analogy with speciation can be pushed further. Just as species go extinct along one evolutionary trajectory but survive along another, the “offspring” of a root statement may die out in one dissemination network but survive in a different dissemination network, perhaps changed significantly from the original meaning of the statement.

The evolution of the corpus of information thus resembles the evolution of species. Each statement has a life of its own, with many statements dying out and others evolving into statements with a meaning different from that of their ancestors. Mutations and selection thus determine the corpus on which LLMs are trained at any given moment in time. With sufficient time elapsed, retraining an LLM may result in a quite different model unless we can figure out how to keep reality from drifting further and further from the truth.
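The drift described above can be illustrated with a toy simulation. The sketch below is our own simplified illustration, not a model of any real platform: it assumes an isolated group of fixed size in which, each round, every post is replaced by a reshared copy of a randomly chosen existing post, and a copy is occasionally altered. The names `reshare_round` and `simulate_group`, the group size, and the alteration rate are all hypothetical choices. The sketch models only random drift and alteration; it leaves out the engagement-based selection that social media algorithms add.

```python
import itertools
import random
from collections import Counter


def reshare_round(posts, mutation_rate, rng, new_id):
    """Replace the group's posts with reshared copies of randomly chosen posts."""
    next_posts = []
    for _ in range(len(posts)):
        variant = rng.choice(posts)        # copy a random existing post
        if rng.random() < mutation_rate:   # occasionally the copy is altered
            variant = variant + (next(new_id),)
        next_posts.append(variant)
    return next_posts


def simulate_group(size, rounds, mutation_rate, seed):
    """Return a Counter of the variants left in one isolated group.

    A variant is a tuple of alteration ids; the empty tuple is the root
    statement, and the tuple's length counts alterations since the root.
    """
    rng = random.Random(seed)
    new_id = itertools.count()
    posts = [()] * size
    for _ in range(rounds):
        posts = reshare_round(posts, mutation_rate, rng, new_id)
    return Counter(posts)


if __name__ == "__main__":
    # Two groups start from the same root statement but never interact.
    for seed in (1, 2):
        group = simulate_group(size=50, rounds=200, mutation_rate=0.02, seed=seed)
        variant, count = group.most_common(1)[0]
        print(f"group {seed}: {len(group)} variants survive; the most common "
              f"is {len(variant)} alterations from the root ({count} posts)")
```

Running the script with different seeds stands in for different isolated groups. Because each group resamples only from its own posts, lineages are lost by chance, and the surviving variants in one group need not match those in another.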

//

Curation is key to establishing reality, but it is not enough

In the sciences, we keep reality from drifting because the scientific corpus is constantly curated. Scientists regularly review what is accepted knowledge and reinforce it if it is still considered to be an accurate depiction of how scientists view reality. New information undergoes a rigorous process of peer review before it is added to the corpus of accepted knowledge.

Careful curation isn’t limited to scientific knowledge. The daily news cycle used to undergo careful curation in newsrooms by journalists who were well-trained in fact-checking. Rigorous editorial processes added to the veracity of the content that the media distributed. This all changed with the advent of social media, where anyone can post anything without paying any attention to truth and reality.

It is not that social media companies are not curating content. They spend a fair amount of time and effort coming up with ways to select content. The focus of their curation, however, is not on truth. Instead, their goal is to boost user engagement to feed their business model.

//

The dangers of curating to boost user engagement

Social media companies’ business model of boosting user engagement regardless of the truth promotes disinformation and misinformation and thus reality drift. Renée DiResta, a former research director on the now-discontinued Election Integrity Partnership at the Stanford Internet Observatory and one of the researchers whose contract at the Stanford Internet Observatory was not renewed, wrote in her new book “Invisible Rulers: The People Who Turn Lies into Reality” about how social media has “enable[d] bespoke realities.” She explains that “[t]he collision of the propaganda machine and the rumor mill gave rise to a choose-your-own-adventure epistemology.” She contrasts “bespoke reality” with “consensus reality”: “Whereas consensus reality once required some deliberation with a broad swath of others, with a shared epistemology to bridge points of disagreement, bespoke reality comfortably supports a complete exit from that process.”

DiResta’s book is full of examples of people making up stories that go viral and are shared by millions of people. The creation of fake stories is very easy with the latest AI technology, according to DiResta: “Generative AI enables us to manufacture unreality: to produce images from worlds that don’t exist, audio that speakers never said, and videos of events that didn’t happen.”

Because of political pressure and lawsuits, few researchers are left to study disinformation and misinformation on social media. As the Washington Post reported in “Stanford’s top disinformation research group collapses under pressure,” Stanford has already paid millions in legal fees, and “[s]tudents and scholars affiliated with the [Stanford Internet Observatory] say they have been worn down by online attacks and harassment amid the heated political climate for misinformation research, as legislators threaten to cut federal funding to universities studying propaganda.”

//

People rely on people to make up their minds

In her book, DiResta quotes two very influential social scientists, Katz and Lazarsfeld, on how people form opinions: “The one source of influence that seemed to be far ahead of all the others in determining the way people made up their minds was personal influence.” Katz and Lazarsfeld wrote their book “Personal Influence” in 1955. Although this book is nearly 70 years old, their major conclusion about the importance of personal influence is still valid.

Over the years, trust in institutions and experts has declined, and people rely more than ever on their personal networks to get information. These networks are now social media channels, dominated by a small number of influencers who have figured out how to work the algorithms to boost their influence, income, and stardom. Some of them are mega influencers with over a million followers. These influencers are trusted by their followers, and some influencers are exploiting this trust and have no qualms about rewriting history or making up facts.

Human behavior has created today’s “Ministry of Truth,” where unscrupulous influencers can make a living by gaining the trust of their followers and spreading sensationalistic and false stories that grab audiences to the detriment of our society. It is unlikely that followers will take the time to fact-check what they read, and they may not even be able to ascertain the truth of a statement. They may also not care whether what they read is true and accurate. As long as it comes from someone they trust and it sounds reasonable to them, they might take the easy way out and believe it. Unless we hold social media companies accountable and require them to change their algorithms to curb the spread of disinformation and misinformation, conspiracy theories and falsehoods will continue to spread, and we will drift further and further away from a consensus reality.